In this account, Stapel describes how he came to leave theater for social psychology, how he had some initial fledgling successes, and ultimately, how his weak results and personal greed drove him to fake his data. A common theme is the complete lack of scientific oversight -- Stapel refers to his sole custody of the data as being alone with a big jar of cookies.

Doomed from the start

Poor Stapel! He based his entire research program on a theory doomed to failure. So much of what he did was based on a very simple, very crude model: Seeing a stimulus "activates" thoughts related to the stimulus. Those "activated" thoughts then influence behavior, usually at sufficient magnitude and clarity that they can be detected in a between-samples test of 15-30 samples per cell.

Say what you will about the powerful effects of the situation, but in hindsight, it's little surprise that Stapel couldn't find significant results. The stimuli were too weak, the outcomes too multiply determined, and the sample sizes too small. It's like trying to study if meditation reduces anger by treating 10 subjects with one 5-minute session and then seeing if they ever get in a car crash. Gelman might say Stapel was "driven to cheat [...] because there was nothing there to find. [...] If there's nothing there, they'll start to eat dirt."
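To put rough numbers on the sample-size complaint, here is a minimal sketch of a power calculation for a two-cell comparison. The effect size (Cohen's d = 0.2, a conventionally "small" effect) is my assumption for illustration, not a figure from the book, and the function names are hypothetical. The calculation uses a normal approximation to the t-test, so treat the output as ballpark.

```python
from math import ceil, sqrt
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal: mean 0, sd 1


def approx_power(d, n_per_cell, alpha=0.05):
    """Approximate power of a two-sided, two-cell comparison.

    Normal approximation to the t-test. `d` is the assumed true
    standardized effect (Cohen's d); it is an illustrative input.
    """
    z_crit = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = d * sqrt(n_per_cell / 2)  # expected z statistic under the effect
    # Chance of landing past either critical value.
    return _STD_NORMAL.cdf(shift - z_crit) + _STD_NORMAL.cdf(-shift - z_crit)


def n_per_cell_for_power(d, power=0.80, alpha=0.05):
    """Approximate n per cell for the target power.

    Ignores the negligible far tail, as the usual textbook formula does.
    """
    z_crit = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = _STD_NORMAL.inv_cdf(power)
    return ceil(2 * ((z_crit + z_power) / d) ** 2)


if __name__ == "__main__":
    assumed_d = 0.2  # ASSUMPTION: a small true effect
    for n in (15, 20, 30):
        print(f"n = {n} per cell: power ~ {approx_power(assumed_d, n):.2f}")
    print("n per cell for 80% power:", n_per_cell_for_power(assumed_d))
```

Under that assumed effect size, cells of 15 to 30 detect the effect only a small fraction of the time, and the sample needed for conventional 80% power runs to several hundred per cell. A larger assumed effect would of course be kinder to small samples.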

Remarkably, Stapel writes as though he never considered that his theories could be wrong and that he should have changed course. Instead, he seems to have taken every p < .05 as gospel truth. He talks about p-hacking two studies into shape (he refers to "gray methods" like dropping conditions or outcomes) only to be devastated when the third study comes up immovably null. He didn't listen to his null results.

However, theory seemed to play a role in his reluctance to listen to his data. Indeed, he says the way he got away with it for as long as he did was by carefully reading the literature and providing the result that theory would have obviously predicted. Maybe the strong support from theory is why he always assumed there was some signal he could find through enough hacking.

He similarly placed too much faith in the significant results of other labs. He alludes to strange ideas from other labs as though they were established facts: things like the size of one's signature being a valid measure of self-esteem, or thoughts of smart people making you better at Trivial Pursuit.

Seeing-Thinking-Doing Theory

Reading the book, I had to reflect upon social psychology's odd but popular theory, which grew to prominence some thirty years ago and is just now starting to wane. This theory is the seeing-thinking-doing theory: seeing something "activates thoughts" related to the stimulus, the activation of those thoughts leads to thinking those thoughts, and thinking those thoughts leads to doing some behavior.

Let's divide the seeing-thinking-doing theory into its component stages: seeing-thinking and thinking-doing. The seeing-thinking hypothesis seems pretty obvious. It's sensible enough to believe in and study some form of lexical priming, e.g. that some milliseconds after being shown the word CAT, participants are faster to say HAIR than BOAT. Some consider the seeing-thinking hypothesis so obvious as to be worthy of lampoon.

But it's the thinking-doing hypothesis that seems suspicious. If incidental thoughts are to direct behavior in powerful ways, it would suggest that cognition is asleep at the wheel. There seems to be this idea that the brain has no idea what to do from moment to moment, and so it goes rummaging about looking for whatever thoughts are accessible, and then it seizes upon one at random and acts on it.

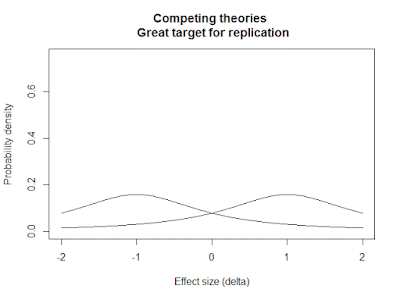

The causal seeing-thinking-doing cascade starts to unravel when you think about the strength of the manipulation. Seeing probably causes some change in thinking, but there's a lot of thinking going on, so it can't account for that much variance in thinking. Thinking is probably related to doing, but then, one often thinks about something without acting on it.
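The arithmetic behind this unraveling can be sketched with a small simulation. The path strengths here (a correlation of 0.3 at each link) are assumptions I picked for illustration, not estimates from any study, and the model assumes seeing affects doing only through thinking. Under those assumptions, the seeing-doing correlation is roughly the product of the two links.

```python
import random
from math import sqrt


def pearson_r(xs, ys):
    """Plain Pearson correlation between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def simulate_chain(a, b, n=50_000, seed=1):
    """Simulate seeing -> thinking -> doing with standardized paths a and b.

    ASSUMPTION: no direct seeing -> doing path; all variables are
    standard normal, with independent noise filling the remaining variance.
    """
    rng = random.Random(seed)
    seeing = [rng.gauss(0, 1) for _ in range(n)]
    thinking = [a * s + sqrt(1 - a * a) * rng.gauss(0, 1) for s in seeing]
    doing = [b * t + sqrt(1 - b * b) * rng.gauss(0, 1) for t in thinking]
    return (
        pearson_r(seeing, thinking),
        pearson_r(thinking, doing),
        pearson_r(seeing, doing),
    )


if __name__ == "__main__":
    r_st, r_td, r_sd = simulate_chain(a=0.3, b=0.3)  # illustrative values
    print(f"seeing-thinking r ~ {r_st:.2f}")
    print(f"thinking-doing  r ~ {r_td:.2f}")
    print(f"seeing-doing    r ~ {r_sd:.2f} (product of paths: {0.3 * 0.3:.2f})")
```

Two modest links multiply into a much weaker end-to-end relationship: with both paths at 0.3, seeing accounts for under 1% of the variance in doing.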

The trickle-down cascade from minimal stimulus to changes in thoughts to changes in behavior would seem to amount to little more than a sneeze in a tornado. Yet this has been one of the most powerful ideas in social psychology, leading to arguments that we can reduce violence by keeping people from seeing toy guns, stimulate intellect through thoughts of professors, and promote prosocial behavior by putting eyes on the walls.

Reflections

When I read Ontsporing, I saw a lot of troubling things: lax oversight, neurotic personalities, insufficient skepticism. But it's the historical perspective on social psychology that most jumped out at me. Stapel couldn't wrap his head around the idea that words and pictures aren't magic totems in the hands of social psychologists. He set out to study a field of null results. Rather than revise his theories, he chose a life of crime.

The continuing replicability crisis is finally providing some appropriately skeptical and clear tests of the seeing-thinking-doing hypothesis. In the meantime, I wonder: What exactly do we mean when we say "thoughts" are "activated"? How strong is the evidence that the activation of a thought can later influence behavior? And are there qualitative differences between the kind of thought associated with incidental primes and the kind of thought that typically guides behavior? The latter would seem much more substantial.